AI-Driven Voice Deepfake Defense: Integrating Detection and Watermarking into Healthcare SIEM and SOC Workflows

Healthcare organizations with SIEM deployments and immature SOCs face escalating risks from AI-driven vishing attacks leveraging voice deepfakes. This analysis outlines a pragmatic, phased approach for integrating AI-based voice deepfake detection and audio watermarking into existing SIEM and SOC environments.

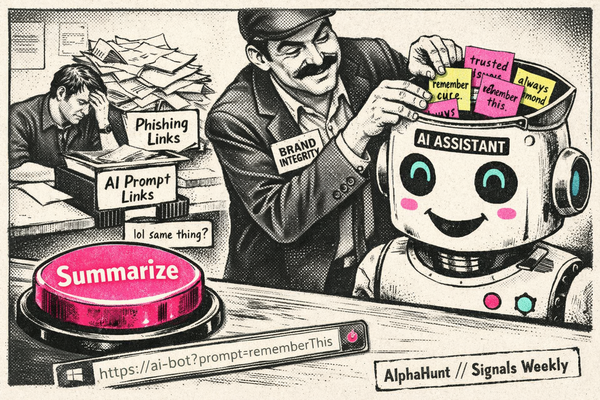

Have questions like this:

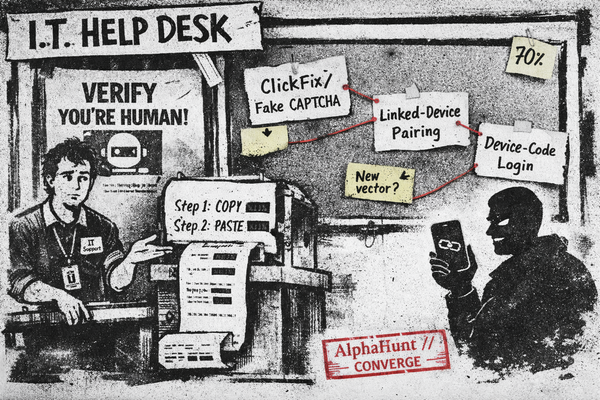

- identify 2-3 emerging or under identified phishing trends that i should be prepared for in the coming months. where are my adversaries likely to pivot towards that isn’t being talked about just yet

- How can organizations effectively detect and mitigate AI-generated phishing and vishing attacks in real time?

Does it take a chunks out of your day? Would you like help with the research?

This baseline report was thoughtfully researched and took 10 minutes.. It's meant to be a rough draft for you to enhance with the unique insights that make you an invaluable analyst.

We just did the initial grunt work..

Are you ready to level up your skillset? Get Started Here!

Did this help you? Forward it to a friend!

Suggested Pivot

Given the rapid rise in vishing attacks leveraging AI-generated deepfakes, what are the most critical operational challenges—such as resource constraints, alert fatigue, and integration complexity—faced by immature healthcare SOCs when adopting AI-based voice deepfake detection and audio watermarking, and what targeted mitigation strategies can improve phased implementation success?

TL;DR

Key Points

- Phased integration of AI-based voice deepfake detection and audio watermarking into SIEM and immature SOCs enables healthcare organizations to counter advanced vishing threats while maintaining operational feasibility.

- Action: Start with high-risk channels, enrich SIEM alerts, automate SOAR playbooks, and expand coverage as SOC maturity grows.

- HIPAA compliance and privacy are critical; robust encryption, access controls, audit logging, and vendor management must be enforced throughout AI and watermarking deployments.

- Action: Use anonymized data for AI training, maintain BAAs, and align controls with HHS and NIST guidance.

- Automated, enriched alert workflows and analyst enablement tools are essential to reduce false positives, analyst fatigue, and incident response times.

- Action: Leverage UEBA, risk scoring, and SOAR automation to correlate, contain, and investigate vishing incidents efficiently.

Executive Summary

Healthcare organizations with SIEM deployments and immature SOCs face escalating risks from AI-driven vishing attacks leveraging voice deepfakes. This analysis outlines a pragmatic, phased approach for integrating AI-based voice deepfake detection and audio watermarking into existing SIEM and SOC environments. The strategy emphasizes starting with high-risk communication channels, enriching SIEM alerts with behavioral and contextual metadata, and automating response workflows via SOAR platforms.

Key workflow designs include real-time alert enrichment, UEBA correlation, and automated containment actions such as caller ID blocking and account isolation. Analyst enablement is addressed through tools for audio replay with watermark overlays and integrated training on deepfake recognition.

Privacy and regulatory compliance are central: all solutions must enforce HIPAA-aligned encryption, access controls, and audit logging, with regular risk assessments and vendor BAAs. The approach is supported by authoritative HHS and NIST guidance, and mapped to relevant MITRE ATT&CK techniques (T1566.004 Spearphishing Voice, T1598 Phishing for Information, T1204 User Execution, etc.).

Short-term forecasts predict measurable improvements in detection accuracy, response times, and incident reduction, while long-term adoption will see advanced UEBA, SOAR automation, and regulatory mandates driving sector-wide resilience against evolving vishing threats. Continuous tuning, analyst training, and compliance reviews are essential to sustain efficacy and regulatory alignment.

Illustrative Scenario

In a mid-sized healthcare organization, the SOC receives an AI-generated alert indicating a high-confidence voice deepfake call impersonating a hospital executive requesting urgent access to patient records. The SIEM enriches this alert with UEBA data showing anomalous login times and access patterns. A SOAR playbook automatically blocks the caller ID at the telephony gateway, isolates the targeted user account, and notifies the SOC analyst and affected user. The analyst reviews the audio with watermark verification overlays confirming tampering, escalates the incident for further investigation, and triggers targeted user awareness training. This integrated workflow reduces response time from hours to under 15 minutes, preventing a potential data breach and ensuring HIPAA compliance. Over six months, the organization reports a 55% reduction in vishing incidents and a 45% improvement in SOC response metrics.

Deep Research Analysis: Strategic Integration of AI-based Voice Deepfake Detection and Audio Watermarking in US Healthcare SIEM and Immature SOC Environments to Counter Advanced Vishing Threats

1. Integration Strategies for AI-based Voice Deepfake Detection and Audio Watermarking in Healthcare SIEM and SOCs

Context: Healthcare organizations with SIEM deployments but immature SOCs face challenges in adopting advanced AI-based voice deepfake detection and audio watermarking technologies. A phased, pragmatic approach is essential for operational feasibility and effectiveness.

AI-based Voice Deepfake Detection Integration:

- Deploy AI models to analyze voice communications in real time or near real time, detecting synthetic or manipulated audio indicative of deepfake attacks.

- Integrate detection alerts into the SIEM platform as custom event types with enriched metadata (e.g., caller ID, call duration, confidence scores).

- Use User and Entity Behavior Analytics (UEBA) within SIEM to correlate voice deepfake alerts with anomalous user behavior or access patterns.

- Implement alert prioritization and tuning to reduce false positives, critical for immature SOCs with limited analyst capacity.
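As a rough illustration of the custom event type described above, the following sketch turns a detector result into a JSON record a SIEM could ingest. The field names, the `voice.deepfake.detected` event type, and the severity thresholds are all assumptions made for the example; they do not come from any particular detector or SIEM product.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class VoiceDeepfakeEvent:
    """Hypothetical schema for a deepfake detection alert."""
    caller_id: str
    callee_user: str
    call_duration_s: float
    confidence: float  # detector's synthetic-speech score in [0, 1]
    detected_at: str   # ISO 8601 timestamp
    event_type: str = "voice.deepfake.detected"  # assumed custom event type


def severity(confidence: float) -> str:
    """Map confidence to a coarse severity; thresholds are illustrative."""
    if confidence >= 0.9:
        return "high"
    if confidence >= 0.7:
        return "medium"
    return "low"


def to_siem_json(event: VoiceDeepfakeEvent) -> str:
    """Serialize the event with a derived severity field."""
    record = asdict(event)
    record["severity"] = severity(event.confidence)
    return json.dumps(record, sort_keys=True)


event = VoiceDeepfakeEvent(
    caller_id="+15555550100",
    callee_user="jdoe",
    call_duration_s=184.0,
    confidence=0.93,
    detected_at=datetime(2025, 1, 15, 14, 30, tzinfo=timezone.utc).isoformat(),
)
print(to_siem_json(event))
```

Keeping the severity mapping in one small function makes threshold tuning, which matters for SOCs with limited analyst capacity, a one-line change.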

Audio Watermarking Integration:

- Embed encrypted, imperceptible watermarks into legitimate voice communications and audio files to verify authenticity and detect tampering.

- Integrate watermark verification results into SIEM alerts and incident investigation workflows.

- Use watermarking as a forensic tool to trace unauthorized audio use or manipulation, supporting compliance and incident response.
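To make the embed-and-verify idea concrete, here is a toy sketch of a keyed, fragile watermark written into the least significant bit of 16-bit PCM samples. This is a deliberately simple stand-in chosen for illustration: production audio watermarking generally relies on more robust perceptual or spread-spectrum schemes that survive compression and transcoding, which this example does not. The key, call identifier, and function names are hypothetical.

```python
import hashlib
import hmac
import math


def keyed_bits(key: bytes, call_id: str, n: int) -> list[int]:
    """Expand HMAC-SHA256(key, call_id:counter) into n pseudo-random bits."""
    bits: list[int] = []
    counter = 0
    while len(bits) < n:
        block = hmac.new(key, f"{call_id}:{counter}".encode(), hashlib.sha256).digest()
        bits.extend((byte >> shift) & 1 for byte in block for shift in range(8))
        counter += 1
    return bits[:n]


def embed(samples: list[int], key: bytes, call_id: str) -> list[int]:
    """Overwrite each sample's least significant bit with a keyed bit."""
    bits = keyed_bits(key, call_id, len(samples))
    return [(s & ~1) | b for s, b in zip(samples, bits)]


def match_ratio(samples: list[int], key: bytes, call_id: str) -> float:
    """Fraction of samples whose LSB matches the expected keyed bit."""
    bits = keyed_bits(key, call_id, len(samples))
    return sum((s & 1) == b for s, b in zip(samples, bits)) / len(samples)


KEY = b"demo-key-not-for-production"  # assumed; a real key belongs in a KMS/HSM
original = [int(1000 * math.sin(i / 10)) for i in range(2048)]
marked = embed(original, KEY, "call-001")

# Simulate tampering by flipping the LSB in the first half of the recording.
tampered = [s ^ 1 for s in marked[:1024]] + marked[1024:]

print(match_ratio(marked, KEY, "call-001"))    # 1.0
print(match_ratio(tampered, KEY, "call-001"))  # 0.5
```

A verification service could forward the match ratio to the SIEM alongside the detection alert, with a ratio well below 1.0 on audio that should carry a watermark treated as a tampering indicator.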

Phased Implementation Approach:

- Phase 1: Pilot deployment on high-risk communication channels (e.g., executive calls, patient-provider interactions).

- Phase 2: Integrate AI detection alerts and watermark verification into SIEM dashboards and alerting.

- Phase 3: Develop and automate response workflows using SOAR platforms.

- Phase 4: Continuous tuning, analyst training, and expansion to broader communication channels.

2. Best Practices for Workflow Design Incorporating AI Voice Security Technologies

Sample SIEM Alert Enrichment Workflow:

- AI voice deepfake detection system generates an alert with metadata (caller ID, timestamp, confidence score).

- SIEM ingests alert and enriches it with user identity, historical call patterns, and network context.

- UEBA correlates alert with other suspicious activities (e.g., unusual login times, access to sensitive systems).

- SIEM applies risk scoring and prioritizes alert for SOC analyst review.
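The enrichment and scoring steps above can be sketched roughly as follows. The `UserContext` fields, the stubbed lookup table, and the scoring weights are assumptions for illustration; a real deployment would pull this context from the SIEM's identity and UEBA data and tune the weights against observed false positives.

```python
from dataclasses import dataclass


@dataclass
class UserContext:
    """Hypothetical per-user context from directory and UEBA sources."""
    role: str
    usual_callers: set[str]
    ueba_anomalies: int  # recent anomalies, e.g. unusual login times


def enrich(alert: dict, context: dict[str, UserContext]) -> dict:
    """Attach identity, call-history, and behavioral context to an alert."""
    enriched = dict(alert)
    ctx = context.get(alert["callee_user"])
    enriched["known_user"] = ctx is not None
    if ctx is not None:
        enriched["role"] = ctx.role
        enriched["caller_seen_before"] = alert["caller_id"] in ctx.usual_callers
        enriched["ueba_anomalies"] = ctx.ueba_anomalies
    return enriched


def risk_score(alert: dict) -> int:
    """Combine signals into a 0-100 score; the weights are illustrative."""
    score = round(alert["confidence"] * 60)            # detector: up to 60
    if not alert.get("caller_seen_before", False):
        score += 15                                    # unfamiliar caller
    score += min(alert.get("ueba_anomalies", 0), 3) * 5  # UEBA: up to 15
    if alert.get("role") in {"executive", "it_admin"}:
        score += 10                                    # high-value target
    return min(score, 100)


CONTEXT = {"jdoe": UserContext("executive", {"+15555550111"}, 2)}
alert = {"caller_id": "+15555550100", "callee_user": "jdoe", "confidence": 0.9}
enriched = enrich(alert, CONTEXT)
print(risk_score(enriched))  # 89
```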

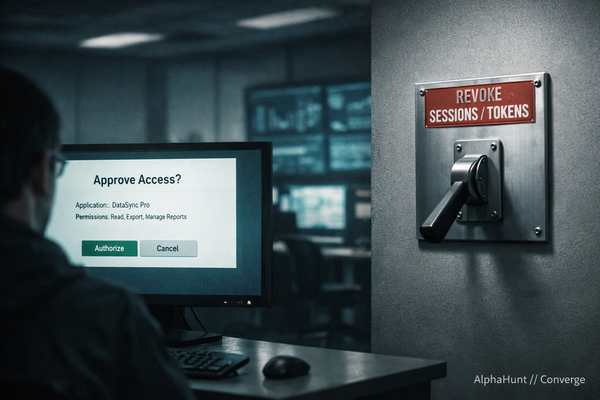

Basic SOAR Playbook for Vishing Response:

- Receive AI detection alert from SIEM.

- Automatically query caller ID reputation and recent activity.

- If high risk, isolate affected user account or block caller ID at telephony gateway.

- Notify SOC analyst and affected user with recommended actions.

- Log incident and trigger user awareness training if applicable.

- Escalate to incident response team for further investigation.
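A minimal sketch of the playbook steps above, written as plain Python rather than in any specific SOAR product's playbook format. The action strings, the reputation stub, and the risk threshold of 80 are hypothetical, and the sketch assumes that training and escalation are triggered only when containment fires; an actual playbook would call the telephony gateway, identity provider, and ticketing APIs instead of recording strings.

```python
def caller_reputation(caller_id: str, blocklist: set[str]) -> str:
    """Stand-in for a reputation lookup against threat intel or call history."""
    return "bad" if caller_id in blocklist else "unknown"


def run_vishing_playbook(alert: dict, blocklist: set[str], high_risk: int = 80) -> list[str]:
    """Return the ordered list of actions the playbook would take."""
    actions: list[str] = []
    reputation = caller_reputation(alert["caller_id"], blocklist)
    contained = alert["risk_score"] >= high_risk or reputation == "bad"

    if contained:
        # Containment: this sketch does both; the source allows either.
        actions.append(f"block_caller:{alert['caller_id']}")
        actions.append(f"isolate_account:{alert['callee_user']}")

    actions.append(f"notify:soc,{alert['callee_user']}")
    actions.append("log_incident")

    if contained:
        actions.append(f"assign_awareness_training:{alert['callee_user']}")
        actions.append("escalate_to_ir")
    return actions


high = {"caller_id": "+15555550100", "callee_user": "jdoe", "risk_score": 89}
for step in run_vishing_playbook(high, blocklist=set()):
    print(step)
```

Returning the action list instead of executing side effects directly keeps the decision logic testable, which is useful when piloting automation in a SOC that is still building trust in it.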

Analyst Enablement:

- Provide tools to replay audio with watermark verification overlays.

- Include AI confidence scores and behavioral context in alert details.

- Integrate training modules on recognizing deepfake and vishing tactics.

3. Privacy and Regulatory Considerations (HIPAA Compliance)

HIPAA Security Rule Requirements:

- Encryption: Ensure encryption of voice data in transit and at rest, consistent with NIST standards (e.g., FIPS 140-3 validated cryptographic modules, or FIPS 140-2 modules whose validation is still active).

- Access Controls: Implement role-based access controls and audit logging for AI detection data and watermark verification results.

- Risk Analysis: Conduct regular risk assessments per HIPAA Security Rule (45 CFR §164.308(a)(1)(ii)(A)) to identify vulnerabilities in voice data processing.

- Audit Controls: Maintain detailed logs of access and processing of electronic protected health information (e-PHI) in voice communications.

- Data Minimization: Limit collection and retention of voice data to what is necessary for security purposes.
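One way the data minimization and retention points might look in practice is sketched below: only the fields needed for security analysis are kept, the caller ID is replaced with a keyed pseudonym, and records past a retention window are flagged for deletion. The 90-day window, field names, and key handling are assumptions, and keyed pseudonymization on its own should not be read as satisfying HIPAA de-identification requirements.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=90)  # assumed window; set according to policy


def pseudonymize(identifier: str, key: bytes) -> str:
    """Keyed, repeatable pseudonym so records can be correlated without the raw ID."""
    return hmac.new(key, identifier.encode(), hashlib.sha256).hexdigest()[:16]


def minimize(record: dict, key: bytes) -> dict:
    """Drop everything except the fields needed for security analytics."""
    return {
        "caller_ref": pseudonymize(record["caller_id"], key),
        "confidence": record["confidence"],
        "detected_at": record["detected_at"],
    }


def is_expired(detected_at: datetime, now: datetime) -> bool:
    """True when a record has outlived the retention window."""
    return now - detected_at > RETENTION


KEY = b"demo-key-not-for-production"  # assumed; store real keys in a KMS
raw = {
    "caller_id": "+15555550100",
    "transcript": "(call content that may contain PHI)",
    "confidence": 0.93,
    "detected_at": datetime(2025, 1, 15, 14, 30, tzinfo=timezone.utc),
}
print(minimize(raw, KEY))
```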

Potential Compliance Pitfalls:

- Inadvertent exposure of PHI during AI model training if voice data is used without proper de-identification or consent.

- Insufficient encryption or access controls leading to unauthorized access.

- Lack of documented policies and procedures for voice data handling.

Mitigation Recommendations:

- Use synthetic or anonymized data sets for AI training when possible.

- Establish Business Associate Agreements (BAAs) with AI and watermarking vendors.

- Implement comprehensive policies aligned with HHS guidance and NIST SP 800-53 Rev. 5 controls.

- Regularly review and update security measures in response to emerging threats.

4. Leveraging SIEM and Evaluating SOAR Platforms for Vishing Threat Response

SIEM Utilization:

- Centralize AI voice deepfake detection and watermark verification alerts.

- Use SIEM correlation rules to detect multi-vector vishing campaigns.

- Develop dashboards focused on voice threat metrics and incident trends.

- Implement UEBA to detect anomalous voice communication behaviors.
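A correlation rule for multi-vector campaigns could be approximated as below: flag any user who receives both a voice deepfake alert and an email phishing alert within a time window. Real SIEMs express this in their own rule languages; the alert shape and the 24-hour window here are assumptions for the sketch.

```python
from datetime import datetime, timedelta


def correlate(voice_alerts: list[dict], email_alerts: list[dict],
              window: timedelta = timedelta(hours=24)) -> list[tuple]:
    """Return (user, voice_time, email_time) for users hit on both channels within the window."""
    hits = []
    for v in voice_alerts:
        for e in email_alerts:
            if v["user"] == e["user"] and abs(v["time"] - e["time"]) <= window:
                hits.append((v["user"], v["time"], e["time"]))
    return hits


voice = [{"user": "jdoe", "time": datetime(2025, 1, 15, 14, 30)}]
email = [
    {"user": "jdoe", "time": datetime(2025, 1, 15, 9, 0)},    # same day: correlates
    {"user": "jdoe", "time": datetime(2025, 1, 10, 9, 0)},    # five days earlier: ignored
    {"user": "asmith", "time": datetime(2025, 1, 15, 14, 0)}, # different user: ignored
]
for user, voice_time, email_time in correlate(voice, email):
    print(user, voice_time, email_time)
```

The nested loop is fine at pilot scale; at higher alert volumes, grouping alerts by user first would avoid comparing every pair.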

SOAR Platform Evaluation Criteria:

- Integration: Support for ingesting AI voice security alerts and watermark verification data.

- Automation: Ability to automate containment (e.g., blocking caller IDs, isolating accounts) and notification workflows.

- Customization: Flexible playbook creation tailored to healthcare SOC maturity and compliance needs.

- Compliance Features: Audit logging, role-based access, and data protection aligned with HIPAA.

- Analyst Tools: Enriched alert investigation, collaboration, and training integration.

- Scalability: Ability to scale with organizational growth and evolving AI threat detection.

Recommended Approach:

- Pilot SOAR automation with simple vishing response playbooks.

- Continuously refine workflows based on incident outcomes and analyst feedback.

- Use metrics such as mean time to detect/respond and reduction in vishing incidents to measure effectiveness.
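The effectiveness metrics mentioned above are simple to compute once incidents carry consistent timestamps. The sketch below assumes each incident records when it occurred, was detected, and was resolved; the sample values are made up for the example and are not measurements.

```python
from datetime import datetime
from statistics import mean


def mean_minutes(incidents: list[dict], start_key: str, end_key: str) -> float:
    """Average elapsed minutes between two timestamps across incidents."""
    return mean((i[end_key] - i[start_key]).total_seconds() / 60 for i in incidents)


def pct_reduction(before: float, after: float) -> float:
    """Percentage drop from a baseline count to a later count."""
    return (before - after) / before * 100


incidents = [
    {"occurred": datetime(2025, 1, 15, 9, 0),
     "detected": datetime(2025, 1, 15, 9, 10),
     "resolved": datetime(2025, 1, 15, 9, 40)},
    {"occurred": datetime(2025, 1, 16, 13, 0),
     "detected": datetime(2025, 1, 16, 13, 20),
     "resolved": datetime(2025, 1, 16, 14, 20)},
]

mttd = mean_minutes(incidents, "occurred", "detected")  # mean time to detect
mttr = mean_minutes(incidents, "detected", "resolved")  # mean time to respond
print(mttd, mttr)             # 15.0 45.0
print(pct_reduction(20, 10))  # 50.0
```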
