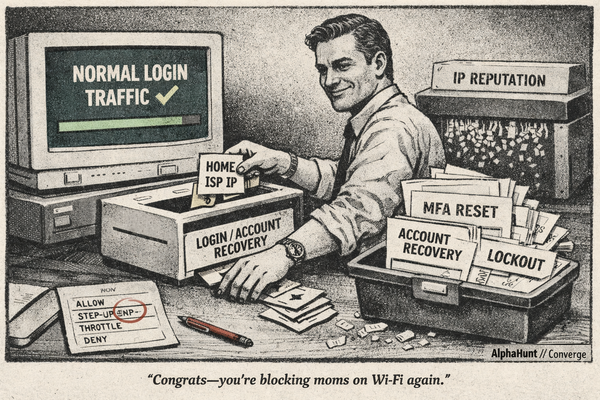

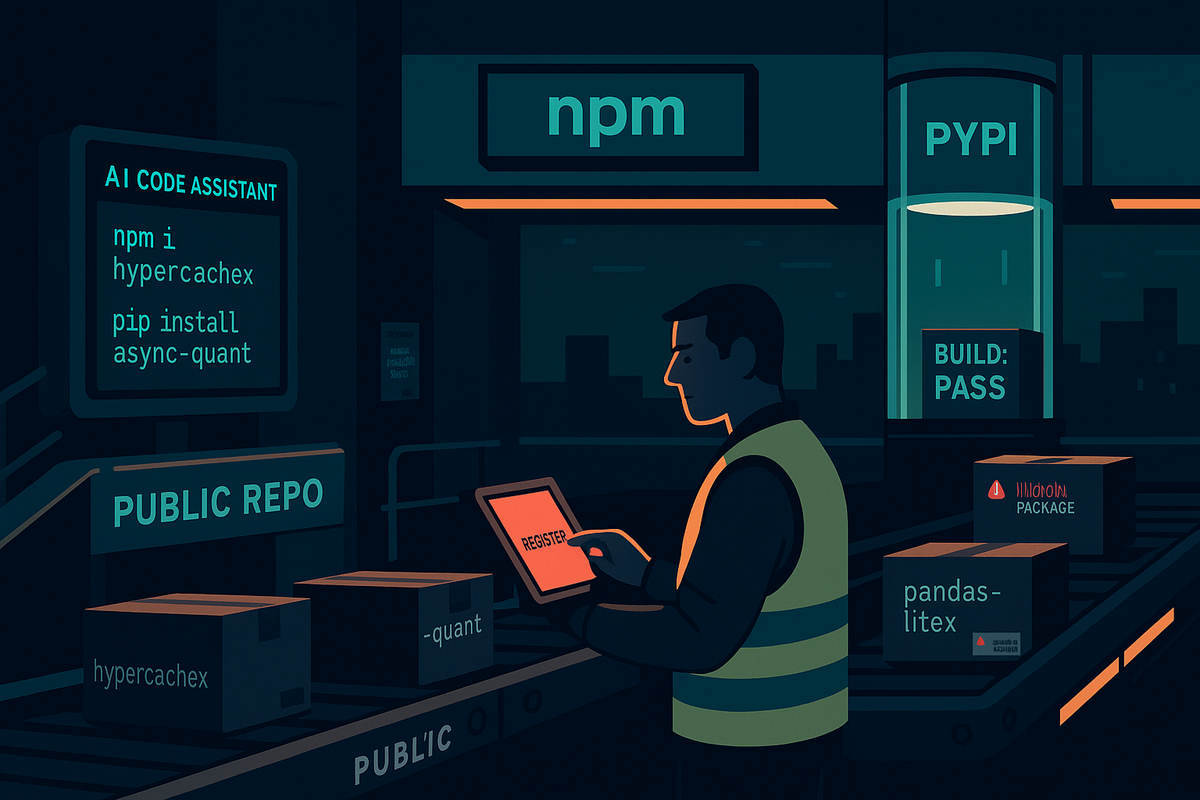

Slopsquatting: AI Hallucinations Fueling a New Class of Software Supply Chain Attacks

Your code assistant invents a “helpful” package; an attacker registers it; your pipeline installs it. As of Aug 27, 2025, this is moving from edge case to repeatable tactic. Here’s how to spot it fast and force your builds to fail closed.


TL;DR

Key Points

- Assume AI tools will propose non-existent dependencies; ~20% of sampled AI outputs do, and 43% of those names repeat, making them targetable at scale.

- Block unknown packages by default with internal proxies/allowlists and hash-pinned deps; make new names an exception, not a path of least resistance.

- Watch for fresh npm/PyPI lookups, first-seen package installs, and build steps that fetch from public registries without provenance.

- Instrument CI/CD to surface “new-to-org” dependencies as a policy event with human approval.

- Plan for higher near-term risk in JavaScript/Python ecosystems over the next 30–90 days as adoption of code assistants grows.

The story in 60 seconds

- Who/what/why: Slopsquatting abuses AI hallucinations: non-existent yet plausible package names produced by code assistants. Adversaries pre-register those names in public registries, then wait for devs or CI to auto-install them.

- TTPs: Registration of plausible names; malicious postinstall/setup.py scripts; collection of environment variables and tokens; outbound beacons to attacker infrastructure; optional version pinning to survive dependency updates. Relevant ATT&CK: T1195 (Supply Chain Compromise), T1204 (User Execution), T1566 (Phishing, when lured via snippets), T1593.003 (Search Open Websites/Domains: Code Repositories), TA0005 (Defense Evasion), TA0006 (Credential Access).

- Sector impact: Highest exposure where JavaScript/Python dominate and build speed trumps review. Orgs with permissive registries and no “first-seen dependency” control face the most risk over the next quarter.

Why it matters

SOC

- Spike in DNS/HTTP(S) to registry.npmjs.org, pypi.org (or mirrors) for first-seen package names.

- npm install / pip install events in build agents or developer endpoints outside standard windows.

- Code execution from package postinstall/setup.py/entry_points during build or test stages.

IR

- Triage: identify the first build that pulled the malicious package; capture the package tarball/wheel and build logs.

- Preserve: CI job artifacts, lockfiles, .npmrc/pip.conf, resolver logs, and developer terminal history.

- Hunt: lateral movement or secrets exfil via install scripts (tokens, .npmrc, .pypirc, cloud creds).

SecOps

- Enforce allowlist proxy for registries; require human approval for new-to-org packages.

- Mandate lockfiles + hash pinning (npm package-lock.json/npm ci, Python pip-tools/--require-hashes).

- Turn on SCA (software composition analysis) with “first-seen dep” policy gates.
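The hash-pinning requirement above can be pre-checked before a build ever calls pip. The following is a minimal sketch, assuming a pip-tools style `requirements.txt`; the function name `unpinned_requirements` is hypothetical. It only reports entries that lack an exact `==` pin or a `--hash=` option. Enforcement itself still comes from `pip install --require-hashes -r requirements.txt` (and from `npm ci` on the npm side).

```python
def unpinned_requirements(text):
    """Return requirement specs that lack an exact pin or a --hash option."""
    # Join backslash continuations so each requirement is one logical line.
    logical_lines = text.replace("\\\n", " ").splitlines()
    problems = []
    for line in logical_lines:
        # Drop trailing comments, then surrounding whitespace.
        line = line.split(" #")[0].strip()
        # Skip blanks, full-line comments, and option lines such as --index-url.
        if not line or line.startswith("#") or line.startswith("-"):
            continue
        if "==" not in line or "--hash=" not in line:
            problems.append(line.split()[0])
    return problems
```

A CI step could fail the job whenever the returned list is non-empty, which keeps the check cheap and independent of network access.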

Strategic

- Make “AI-generated code safety” a board-visible risk in SDLC policy.

- Assign product owners for dependency hygiene and provenance.

- Require vendors to document how their AI assistants verify package existence.

See it in your telemetry

Network

- First-seen queries or fetches for package names not present in org allowlists.

- Short-lived HTTPS bursts to registry endpoints from CI runners right before build artifacts change.

- Downloads of .tgz (npm) or .whl/.tar.gz (PyPI) from newly created or low-reputation projects.
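As a rough illustration of the first-seen signal, the sketch below pulls package names out of registry URLs seen in proxy or egress logs and reports those missing from an allowlist. The function names and the allowlist shape (a set of `(ecosystem, name)` tuples) are assumptions for this example. It covers only `registry.npmjs.org` paths and the `pypi.org/simple/` and `pypi.org/pypi/` paths; mirrors and file hosts such as `files.pythonhosted.org` would need their own rules.

```python
import re
from urllib.parse import urlparse, unquote

def package_from_url(url):
    """Return (ecosystem, name) for a recognized registry URL, else None."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    parts = [unquote(p) for p in parsed.path.split("/") if p]
    if host == "registry.npmjs.org" and parts:
        if parts[0].startswith("@"):
            # Scoped packages appear as /@scope%2Fname or /@scope/name.
            if "/" in parts[0]:
                return ("npm", parts[0].lower())
            if len(parts) >= 2:
                return ("npm", f"{parts[0]}/{parts[1]}".lower())
            return None
        return ("npm", parts[0].lower())
    if host == "pypi.org" and len(parts) >= 2 and parts[0] in ("simple", "pypi"):
        # PEP 503 normalization: lowercase, runs of -, _ and . become one hyphen.
        return ("pypi", re.sub(r"[-_.]+", "-", parts[1]).lower())
    return None

def first_seen(urls, allowlist):
    """Return packages requested in urls that are not in the allowlist, in order."""
    seen = set()
    flagged = []
    for url in urls:
        pkg = package_from_url(url)
        if pkg and pkg not in allowlist and pkg not in seen:
            seen.add(pkg)
            flagged.append(pkg)
    return flagged
```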

Endpoint (developer + build agents)

- Processes: npm install, npm ci, pip install, pipx, python setup.py spawning shells or network utilities.

- File mods: creation of new directories under node_modules/ or site-packages/, immediately followed by script execution.

- Secrets access: reads of .npmrc, .pypirc, cloud CLI creds right after install.
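One way to turn the process signal into a hunt query is sketched below. It assumes process-creation events have already been exported as dictionaries with `parent` and `child` process names. That schema, and both name sets, are hypothetical and would need mapping to whatever the EDR actually emits. Install scripts legitimately run through a shell in many projects, so this is a triage filter and not a verdict.

```python
# Hypothetical name sets; tune to the processes seen in your environment.
INSTALLERS = {"npm", "pip", "pipx", "python"}
FLAGGED_CHILDREN = {"sh", "bash", "curl", "wget", "nc"}

def suspicious_spawns(events):
    """Return events where an installer started a shell or network utility."""
    return [
        event for event in events
        if event["parent"] in INSTALLERS and event["child"] in FLAGGED_CHILDREN
    ]
```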

Code/CI logs

- “Package not in lockfile,” “No matching hash,” or resolver fallbacks to latest.

- First-time dependency approvals (or lack thereof) tied to a specific MR/PR.

- Build steps pulling directly from the internet instead of internal cache/proxy.

High Impact, Quick Wins

- Put a gate in front of registries: Route npm/PyPI through an internal proxy with allowlisting and signed artifact caching. Measure: % of builds fetching only from internal cache; count of blocked first-seen names.

- Make unknown == fail-closed: Enforce lockfiles + hash pinning (npm ci, pip --require-hashes) and block builds that add deps without an approval tag. Measure: build-failure rate due to policy and time-to-approval.

- Surface “first-seen dependency” as a signal: Add a CI policy that creates a ticket and requires reviewer sign-off for any new package. Measure: MTTA from alert to decision; number of new deps per release.
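A first-seen dependency gate can be as small as a set difference between the lockfile and an org-approved list. The sketch below assumes an npm `package-lock.json` with a top-level `packages` map (lockfileVersion 2 or 3). The function names and the idea of keeping approved names in a plain set are illustrative assumptions, not a reference to any particular tool.

```python
import json

def lockfile_packages(lock_text):
    """Return the set of package names listed in a package-lock.json."""
    lock = json.loads(lock_text)
    names = set()
    for path in lock.get("packages", {}):
        # Keys look like "node_modules/a" or "node_modules/a/node_modules/b";
        # the root project ("") and workspace paths are skipped.
        if "node_modules/" in path:
            names.add(path.rsplit("node_modules/", 1)[1])
    return names

def new_to_org(lock_text, approved):
    """Return lockfile packages that are not yet on the approved list."""
    return sorted(lockfile_packages(lock_text) - set(approved))
```

In a pipeline, a non-empty result would open the approval ticket and fail the job until a reviewer adds the name to the approved list.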

Suggested Pivots

- How do specific AI code generation models such as (SUBSCRIBE TO UNLOCK!) compare in their rates and patterns of hallucinating non-existent package names, and what fine-tuning or hallucination detection algorithms have proven most effective in reducing slopsquatting risks within these models?

  Justification: Given the 20% prevalence of hallucinated packages and the 43% repeatability rate across AI runs, understanding model-specific vulnerabilities and mitigation techniques is critical for targeted defenses.

- Which public package repositories, specifically (SUBSCRIBE TO UNLOCK!), are most frequently targeted by slopsquatting attacks, and what automated monitoring techniques (e.g., anomaly detection, semantic similarity analysis, registration timing patterns) provide the highest accuracy and timeliness in detecting malicious registrations? How can these detection systems be integrated into CI/CD pipelines for real-time prevention?

  Justification: Attackers exploit rapid registration of hallucinated names in these repositories; thus, repository-specific detection strategies are essential to prevent supply chain compromise.

- What organizational (SUBSCRIBE TO UNLOCK!) have been shown in case studies or behavioral research to influence susceptibility to slopsquatting attacks, and what targeted training programs or cultural interventions most effectively reduce blind trust in AI-generated code dependencies?

  Justification: Since developer trust in AI-generated code is a key enabler of slopsquatting, understanding and shaping human factors is vital for comprehensive risk mitigation.


Executive Summary

Slopsquatting is an emergent software supply chain threat that leverages the statistical outputs and hallucinations of large language models (LLMs) used in AI code generation tools. Unlike typosquatting or dependency confusion, slopsquatting targets package names that do not exist but are repeatedly suggested by AI assistants such as GitHub Copilot, ChatGPT, and CodeLlama. Research indicates that 20% of AI-generated code samples include non-existent packages, with 43% of hallucinated names recurring across multiple AI runs, making them predictable and attractive for adversaries.

Threat actors—ranging from opportunistic cybercriminals to APTs—monitor AI outputs and rapidly register these hallucinated names in public repositories (e.g., npm, PyPI), embedding malicious payloads. The attack surface is expanding rapidly due to the widespread adoption of AI-assisted development, especially in high-growth ecosystems like JavaScript and Python. Detection is challenging, as hallucinated names are semantically plausible and not simple misspellings.

Mitigation strategies include integrating advanced dependency scanning and real-time monitoring into CI/CD pipelines, fine-tuning AI models to verify package legitimacy, and training developers to recognize and report suspicious dependencies. Collaboration with AI tool vendors to implement hallucination detection and allowlisting is recommended. Organizations are advised to treat slopsquatting as a board-level risk and adopt secure SDLC practices that address AI-driven supply chain threats.

Short-term forecasts predict a surge in slopsquatting incidents targeting npm and PyPI, with attackers exploiting the repeatability and plausibility of hallucinated names. Long-term, slopsquatting is expected to evolve into a highly automated, hybrid attack vector, prompting regulatory responses and industry-wide adoption of AI risk management in software development pipelines.

Research

Definition and Unique Characteristics of Slopsquatting

- Slopsquatting is a supply chain attack where threat actors register software package names that do not exist but are "hallucinated" (i.e., invented) by AI code generation tools. When developers or automated systems use these AI-generated suggestions, they may inadvertently import malicious packages.

- Distinct from Typosquatting: Typosquatting relies on human typographical errors (e.g., "reqeusts" instead of "requests"). Slopsquatting, by contrast, exploits AI-generated, plausible-sounding package names that do not exist in official repositories.

- Distinct from Dependency Confusion: Dependency confusion attacks exploit the precedence of public over private packages with the same name. Slopsquatting targets entirely new, hallucinated names that are not present in any registry until registered by an attacker.

- Repeatability: Research shows that 43% of hallucinated package names are consistently repeated across multiple AI runs, making them predictable targets for attackers (Stripe OLT, 2025).
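Because the defining property is that the name does not exist until someone registers it, a quick existence lookup is a useful first triage step for any unfamiliar dependency. The sketch below queries the PyPI JSON endpoint (`https://pypi.org/pypi/<name>/json`), which returns HTTP 404 for unregistered projects. The function name and the injectable `urlopen` parameter are assumptions made so the logic can be exercised without network access. Existence is not evidence of legitimacy: a name that resolves may already have been claimed by an attacker.

```python
import urllib.error
import urllib.parse
import urllib.request

def exists_on_pypi(name, urlopen=urllib.request.urlopen):
    """Return True if PyPI serves metadata for name, False on HTTP 404."""
    url = f"https://pypi.org/pypi/{urllib.parse.quote(name)}/json"
    try:
        with urlopen(url, timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        # Other errors (rate limits, outages) are not an answer either way.
        raise
```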

Attribution: Threat Actors, Motivations, and TTPs

- Threat Actors: Slopsquatting is accessible to a wide range of actors, from opportunistic cybercriminals to advanced persistent threat (APT) groups, due to the low barrier to entry and high potential impact.

- Motivations: Motivations include financial gain (via malware, ransomware, or cryptomining), espionage, or disruption of software supply chains.

- TTPs (Tactics, Techniques, and Procedures):

  - Monitoring AI code generation outputs, developer forums, and public code repositories for hallucinated package names.

  - Using automated tools or their own LLMs to generate lists of hallucinated names.

  - Rapid registration of these names on public repositories (e.g., npm, PyPI).

  - Embedding malicious payloads in these packages, which are then imported by unsuspecting developers or CI/CD pipelines.

- Underground Activity: While no major slopsquatting campaigns have been publicly attributed to specific threat groups, security vendors and researchers have observed increased chatter in underground forums about exploiting AI-generated package names (FOSSA, 2025).

- Related Incidents: In January 2025, Socket researchers identified a malicious npm package (@async-mutex/mutex) that typosquatted the legitimate async-mutex, showing how AI can amplify existing squatting risks (Stripe OLT, 2025).
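Since the tactic depends on rapid registration, project age is a cheap heuristic for triaging an unfamiliar dependency. The sketch below works on an already-fetched PyPI JSON metadata document and reads the `upload_time_iso_8601` field of each release file. The function names and the 30-day threshold are assumptions for illustration, and a young project is only a prompt for review, not proof of malice.

```python
from datetime import datetime, timezone

def first_upload(metadata):
    """Return the earliest file upload time in PyPI JSON metadata, or None."""
    times = [
        # Replace the trailing Z so older Python versions can parse the value.
        datetime.fromisoformat(item["upload_time_iso_8601"].replace("Z", "+00:00"))
        for files in metadata.get("releases", {}).values()
        for item in files
    ]
    return min(times) if times else None

def is_recent(metadata, now, max_age_days=30):
    """Treat projects with no uploads, or a first upload newer than the cutoff, as recent."""
    first = first_upload(metadata)
    return first is None or (now - first).days < max_age_days
```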

Comparative Risk Assessment

- Prevalence: A 2025 academic study found that 20% of 576,000 AI-generated Python and JavaScript code samples included non-existent packages, with open-source LLMs hallucinating at a higher rate (21.7%) than commercial models (5.2%) (FOSSA, 2025).

- Impact: If a hallucinated package becomes widely recommended by AI tools and is registered by an attacker, the potential for widespread compromise is significant.

- Detection Difficulty: Slopsquatting is harder to detect than typosquatting because the names are not simple misspellings but plausible, semantically convincing, and often persistent across multiple AI-generated outputs.

- Incident Evidence: While no large-scale slopsquatting attacks have been widely reported as of mid-2025, the threat is gaining attention. Industry warnings and minor incidents (such as the @async-mutex/mutex npm case) demonstrate the feasibility and growing risk.

- Comparison Table:

| Attack Type | Vector | Exploits | Detection Difficulty | Prevalence (2025) | AI-Enabled? |
| --- | --- | --- | --- | --- | --- |
| Typosquatting | Human error | Misspellings | Moderate | High (Sonatype, 2024) | No |
| Dependency Confusion | Registry precedence | Name collision | High | Moderate (FOSSA, 2021) | No |
| Slopsquatting | AI hallucination | Nonexistent names | Very High | Rising Fast (FOSSA, 2025) | Yes |

AI Code Generation’s Impact

- AI code assistants (e.g., GitHub Copilot, ChatGPT, CodeLlama) are increasingly used in software development, and their hallucinations are persistent and repeatable.

- According to FOSSA, roughly 20% of generated code samples from various AI coding models included at least one recommended package that didn’t actually exist. Crucially, these aren’t all random one-offs: a majority of the fake names recurred. Over 58% of hallucinated package names reappeared in more than one run, and 43% showed up in every one of ten attempts with the same prompt (FOSSA, 2025).

- The risk is amplified by the trust developers place in AI-generated code and the speed at which hallucinated names can be registered by attackers.
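To make the two repeatability figures concrete, the sketch below computes the same kind of ratios for a team's own prompts: the share of hallucinated names that appear in more than one run, and the share that appear in every run. It is an illustration of the metric under the assumption that each run's hallucinated names have already been collected into a set; it is not the cited study's methodology, and `repeat_rates` is a hypothetical name.

```python
from collections import Counter

def repeat_rates(runs):
    """runs: list of sets of hallucinated names, one set per run of the same prompt."""
    # Count in how many runs each distinct name appears.
    counts = Counter(name for run in runs for name in set(run))
    total = len(counts)
    if total == 0:
        return {"multiple_runs": 0.0, "every_run": 0.0}
    multiple = sum(1 for c in counts.values() if c > 1)
    every = sum(1 for c in counts.values() if c == len(runs))
    return {"multiple_runs": multiple / total, "every_run": every / total}
```

Names that score high on `every_run` are the ones worth pre-emptively blocking or reserving, since they are the most predictable targets.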

Lessons Learned from Documented Incidents

- Persistence and Repeatability: Hallucinated package names are not random; 43% of hallucinations reappeared in 10 successive AI runs, making them reliable targets for attackers (Stripe OLT, 2025).

- Semantic Similarity: Hallucinated names often mimic legitimate packages, increasing the likelihood of developer trust.

- Proof-of-Concepts and Minor Incidents: While no major in-the-wild slopsquatting campaigns have been confirmed, academic demonstrations and minor incidents (e.g., malicious npm packages) validate the risk.

- Industry Warnings: Security vendors and researchers are increasingly warning about the potential for slopsquatting to become a major attack vector as AI adoption accelerates.

Detection and Mitigation Strategies

- Proactive Measures:

  - Use internal proxies for all external package requests to centralize scanning, logging, and validation.

  - Explicitly specify legitimate package names in prompts or code generation workflows.

  - Instruct AI models to verify package legitimacy before suggesting imports.

  - Fine-tune AI models with curated lists of known legitimate packages.

- Automated Scanning: Employ dependency scanners and runtime monitoring tools to detect and block suspicious or unverified packages (FOSSA, 2025).

- Human Oversight: Regularly review AI-generated code and dependencies, especially in critical or production environments.

- Vendor Recommendations: Employ dedicated tools to identify and mitigate malicious or suspicious open-source dependencies. Solutions like Endor Labs, Checkmarx, and Socket offer visibility into risky packages and help detect malicious behaviours early in the development lifecycle (Stripe OLT, 2025).
