If your “AI Coworker” Gets Targeted, What Tips You Off First?

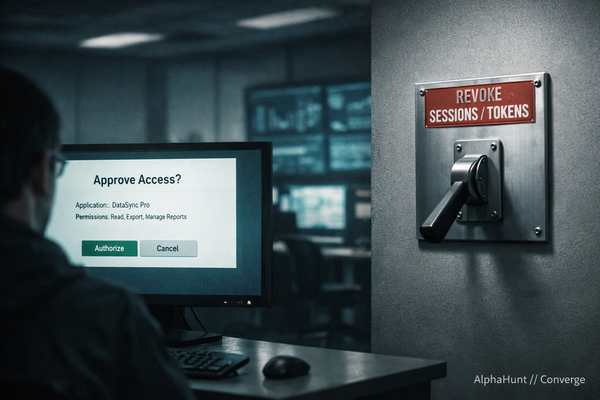

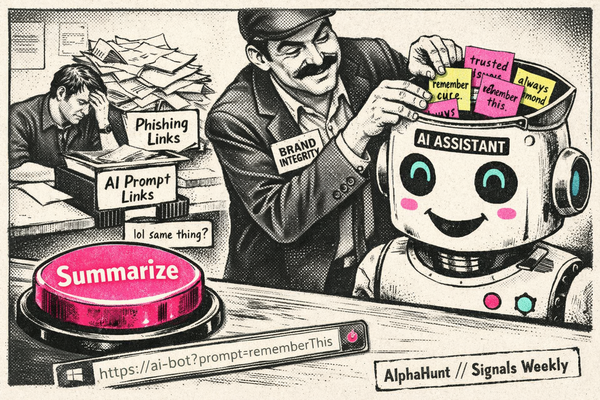

Your “AI coworker” isn’t the breach. The OAuth trust event is. 🔥🕵️‍♂️ Device-code phishing + consent traps = “approve to exfil.” (And yes, AI agents are already being used as the wrapper.)

TL;DR

- Prioritize new trust events (OAuth consent/app grants) over endpoint IOCs; they’re the earliest durable foothold.

- Treat device code flow and non-interactive token use as “approval-to-exfil” tripwires.

- Alert on lookalike app names + risky scopes; Microsoft documents attackers spoofing app names to appear legitimate.

- Make token replay harder and more visible with Token Protection telemetry (bound vs unbound).
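To make the “lookalike app names + risky scopes” idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the event shape (`app_id`, `app_name`, `scopes`), the allowlist of trusted apps, the placeholder app IDs, the similarity threshold, and the choice of which scopes count as risky. It is not a vendor API; map the fields to whatever your consent audit log actually exposes.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist: app IDs you have verified, mapped to display names.
TRUSTED_APPS = {
    "app-id-teams": "Microsoft Teams",
    "app-id-docusign": "DocuSign",
}

# Illustrative set of delegated scopes worth a second look; tune for your tenant.
RISKY_SCOPES = {
    "Mail.Read", "Mail.ReadWrite",
    "Files.Read.All", "Files.ReadWrite.All",
    "offline_access",
}


def normalize(name):
    """Lowercase and keep only letters/digits so spacing tricks don't matter."""
    return "".join(ch for ch in name.casefold() if ch.isalnum())


def lookalike_of(event, threshold=0.85):
    """Return the trusted name this unverified app imitates, or None."""
    if event["app_id"] in TRUSTED_APPS:
        return None  # verified app ID, so the name is not a spoof
    candidate = normalize(event["app_name"])
    for trusted_name in TRUSTED_APPS.values():
        ratio = SequenceMatcher(None, candidate, normalize(trusted_name)).ratio()
        if ratio >= threshold:
            return trusted_name
    return None


def score_consent(event):
    """Summarize why a consent grant might deserve an alert."""
    imitated = lookalike_of(event)
    risky = sorted(set(event["scopes"]) & RISKY_SCOPES)
    return {
        "lookalike_of": imitated,
        "risky_scopes": risky,
        "alert": imitated is not None and bool(risky),
    }
```

Keying trust on the app ID rather than the display name matters here: an unverified app that copies a trusted name exactly is still flagged, because the name is the part an attacker controls.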


What LIKELY changes

State actors will likely treat signs that an “AI coworker” is installed or in use as a lead generator: a way to identify who can be socially engineered into granting broad SaaS access. From there, they pivot to token-driven API collection that avoids malware.

- This is consistent with recent, publicly reported cloud identity and OAuth-driven campaigns: device code phishing for tokens, illicit consent grants, and OAuth token compromise enabling bulk SaaS data access.
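The “approve, then collect via tokens” sequence described above can be sketched as a simple correlation over sign-in records. This is a rough illustration under stated assumptions: the record fields (`user`, `time`, `protocol`, `interactive`, `ip`, `token_binding`), the `"deviceCode"` protocol label, and the 24-hour window are all hypothetical placeholders, not a specific product's log schema.

```python
from datetime import timedelta


def find_approve_to_exfil(events, window=timedelta(hours=24)):
    """Flag non-interactive token use that follows a device code sign-in.

    Returns (user, device_code_time, follow_up_time, token_binding) tuples
    for follow-up sign-ins that are non-interactive, come from a different
    IP than the device code approval, and fall inside the window.
    """
    hits = []
    last_device_code = {}  # user -> most recent device code sign-in record
    for ev in sorted(events, key=lambda e: e["time"]):
        user = ev["user"]
        if ev["protocol"] == "deviceCode":
            last_device_code[user] = ev
            continue
        prior = last_device_code.get(user)
        if prior is None:
            continue  # no device code approval seen for this user yet
        in_window = ev["time"] - prior["time"] <= window
        if in_window and not ev["interactive"] and ev["ip"] != prior["ip"]:
            # Carry the binding status along so triage can see bound vs unbound.
            binding = ev.get("token_binding", "unknown")
            hits.append((user, prior["time"], ev["time"], binding))
    return hits
```

The different-IP condition is a deliberate simplification to cut noise; a stricter variant could instead alert on any unbound non-interactive use after a device code approval.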
