Dark LLMs: When Your AI Traffic Is C2

Your “normal” AI traffic can be stealth C2 now. Dark LLMs are writing per-host pwsh one-liners, rewriting their own droppers, and hiding in model APIs you approved. If you’re not policing AI egress, you’re not doing detection. 😬🤖

TL;DR

Key Points

- Detect agentic one‑liners and hourly self‑rewriting droppers that automate recon and collection while defeating signature‑based detection.

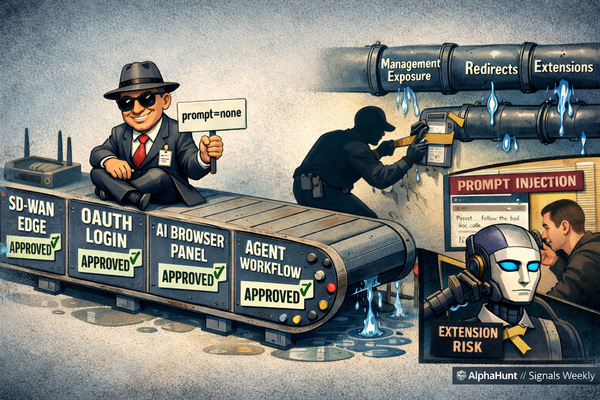

- Block unsanctioned AI/model API endpoints; govern API keys to disrupt “prompt‑proxy” C2 and exfiltration.

- Guard OAuth/app registrations and service principals to blunt cloud recon and mailbox or data exports.

Detections / Actions

- Enforce AI egress allowlists; bind API keys to IP, geography, and time windows; rate‑limit them; and revoke or rotate exposed keys within 30 minutes. Metrics: zero unsanctioned AI egress; key‑revoke MTTR ≤30 minutes.

- Constrain scripting with PowerShell CLM (Constrained Language Mode), Script Block/Module Logging, AMSI (Antimalware Scan Interface), WDAC (Windows Defender Application Control), and script signing. Metrics: ≥90% script logging coverage; metamorphic‑script detection in ≤15 minutes.

- Require admin consent for all app registrations; disable external OAuth consent; apply CA (Conditional Access) and JIT (Just‑in‑Time) for service principals. Metrics: 100% app regs gated; zero external consents; rogue app disable ≤30 minutes.
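As a rough illustration of the egress allowlist control above, the sketch below classifies destination hostnames from proxy or DNS logs. The domain names, `SANCTIONED_AI_HOSTS`, `KNOWN_AI_DOMAINS`, and both function names are hypothetical placeholders, not a vetted provider list; a real deployment would source them from change-controlled configuration.

```python
# Hypothetical values for illustration only; replace with your own lists.
SANCTIONED_AI_HOSTS = {"api.approved-llm.example"}
KNOWN_AI_DOMAINS = ("approved-llm.example", "other-llm.example", "model-host.example")


def classify_egress(host: str) -> str:
    """Return 'sanctioned', 'unsanctioned_ai', or 'other' for a destination host."""
    h = host.strip().lower().rstrip(".")  # normalize case and trailing root dot
    if h in SANCTIONED_AI_HOSTS:
        return "sanctioned"
    # Any other host under a known AI provider domain is off the allowlist.
    if any(h == d or h.endswith("." + d) for d in KNOWN_AI_DOMAINS):
        return "unsanctioned_ai"
    return "other"


def unsanctioned_ai_egress(hosts):
    """Filter a list of observed hosts down to unsanctioned AI destinations."""
    return [h for h in hosts if classify_egress(h) == "unsanctioned_ai"]
```

An empty result from `unsanctioned_ai_egress` over a reporting period corresponds to the "zero unsanctioned AI egress" metric.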

The story in 60 seconds

E‑crime is operationalizing LLM‑in‑the‑loop tradecraft: malware synthesizes per‑host cmd/PowerShell (pwsh) one‑liners for discovery and collection, then regenerates itself on timers to evade static and ML‑based detections. Stolen API keys and enterprise AI connectors provide covert rails disguised as normal model traffic, for example short, repetitive JSON exchanges with sanctioned AI/model API endpoints during recon and export windows. Business email compromise (BEC) quality rises through localized lures, thread hijacks, and compliance‑themed pretexts that drive vendor‑bank‑change fraud.

Critical infrastructure impact concentrates on IT compromises that disrupt OT‑adjacent systems (engineering workstations, historians, and jump hosts) through identity abuse, weak segmentation, and ransomware pressure, rather than direct PLC manipulation.


High Impact, Quick Wins

- Track unified KPIs: unsanctioned AI egress=0; key‑revoke MTTR ≤30 minutes; ≥90% script logging; app‑reg approvals=100%.

- Pre‑stage OT isolation runbooks; segment and harden jump hosts; maintain offline golden images for HMI/engineering.

- Gate vendor bank changes with out‑of‑band voice verification and dual approval.
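The KPI targets in the first item above can be checked mechanically once the underlying counts are collected. The following is a minimal sketch, assuming "script logging" means the fraction of hosts with script logging enabled and that key incidents are recorded as (detected, revoked) timestamp pairs; the function and field names are hypothetical.

```python
from datetime import datetime
from statistics import mean


def key_revoke_mttr_minutes(incidents: list[tuple[datetime, datetime]]) -> float:
    """Mean minutes from detection to revocation; 0.0 when there are no incidents."""
    minutes = [(revoked - detected).total_seconds() / 60 for detected, revoked in incidents]
    return mean(minutes) if minutes else 0.0


def kpi_report(unsanctioned_egress_events, key_incidents,
               hosts_logging, hosts_total, app_regs_approved, app_regs_total):
    """Return pass/fail flags for the four KPI targets (thresholds from the text)."""
    logging_cov = hosts_logging / hosts_total if hosts_total else 0.0
    # Assumption: with no app registrations in the period, the target is met.
    approval = app_regs_approved / app_regs_total if app_regs_total else 1.0
    return {
        "unsanctioned_ai_egress_ok": unsanctioned_egress_events == 0,
        "key_revoke_mttr_ok": key_revoke_mttr_minutes(key_incidents) <= 30,
        "script_logging_ok": logging_cov >= 0.90,
        "app_reg_approvals_ok": approval == 1.0,
    }
```

Reporting the four flags together keeps the targets visible as a single scorecard instead of four separate dashboards.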

Why it matters

SOC

- Detect rapid PowerShell chains with high‑entropy arguments performing ≥3 admin actions in ≤5 seconds.

- Detect hourly rewrites at a stable path where hashes change but imported APIs remain stable.

- Flag server or service‑principal traffic to sanctioned AI/model API endpoints and short, repetitive JSON request/response pairs.
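The self‑rewriting dropper pattern in the second item above (changing hashes, stable imports, regular cadence) can be sketched over file‑write telemetry. This is an illustrative sketch under assumptions: the observation tuple shape is hypothetical, "hourly" is approximated as 30 to 90 minutes between versions (the window used in the Endpoint list later in this piece), and the set of imported APIs is assumed to be extracted upstream.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_rewriting_paths(observations, min_versions=3,
                         min_gap=timedelta(minutes=30),
                         max_gap=timedelta(minutes=90)):
    """observations: iterable of (path, seen_at, sha256_hex, imported_apis)."""
    by_path = defaultdict(list)
    for path, seen_at, digest, apis in observations:
        by_path[path].append((seen_at, digest, frozenset(apis)))

    flagged = []
    for path, rows in by_path.items():
        rows.sort(key=lambda r: r[0])  # order by timestamp only
        versions = []  # (first_seen, digest) for each new hash in sequence
        for seen_at, digest, _ in rows:
            if not versions or versions[-1][1] != digest:
                versions.append((seen_at, digest))
        gaps = [b[0] - a[0] for a, b in zip(versions, versions[1:])]
        stable_apis = len({apis for _, _, apis in rows}) == 1
        if (len(versions) >= min_versions and stable_apis
                and all(min_gap <= g <= max_gap for g in gaps)):
            flagged.append(path)
    return sorted(flagged)
```

Requiring a stable import set is what separates this pattern from ordinary software updates, which usually change imports along with the hash.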

IR

- Forensically image engineering workstations and jump hosts; preserve Script Block/AMSI logs and scheduled task histories.

- Correlate consent events → token spikes → list/get storms → mailbox export jobs within 24 hours.

- Collect provider billing/quota telemetry and OAuth/app‑consent logs to timeline API key abuse.
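The correlation chain in the second item above can be expressed as an ordered‑sequence match within a 24‑hour window. The sketch below assumes events have already been normalized per app or service principal into `(timestamp, kind)` pairs; the kind labels in `CHAIN` are hypothetical names, not fields from any specific log source.

```python
from datetime import datetime, timedelta

# Hypothetical normalized event kinds, in the order the chain is expected.
CHAIN = ("consent", "token_spike", "list_get_storm", "export_job")


def find_chains(events, window=timedelta(hours=24)):
    """Return timestamp tuples for each consent event followed, in order and
    within `window`, by every remaining stage in CHAIN."""
    ordered = sorted(events, key=lambda e: e[0])
    hits = []
    for i, (start, kind) in enumerate(ordered):
        if kind != CHAIN[0]:
            continue
        stage, times = 1, [start]
        for ts, k in ordered[i + 1:]:
            if ts - start > window:
                break  # later events fall outside the window
            if k == CHAIN[stage]:
                times.append(ts)
                stage += 1
                if stage == len(CHAIN):
                    hits.append(tuple(times))
                    break
    return hits
```

Each returned tuple gives the four timestamps needed to seed an incident timeline.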

SecOps

- Apply TL;DR controls: enforce AI egress allowlists and API key governance; enforce OAuth/app‑reg guardrails; constrain scripting with CLM/AMSI/WDAC and script signing.

- Block LOLBins from user‑writable paths; standardize PowerShell logging baselines.

- Align change control to sanctioned AI/model API endpoints and approved connectors.

Strategic

- Fund OT adjacency hardening and isolation drills; validate recovery from offline images.

- Institutionalize dual‑approval, out‑of‑band verification for vendor bank changes.

- Review third‑party AI connectors for scope minimization and consent governance.

See it in your telemetry

Network

- Alert on >100 short (<2 KB) JSON calls/hour from server or service‑principal identities off‑hours to sanctioned AI/model API endpoints.

- Flag TLS SNI values or DNS lookups for sanctioned AI/model API endpoints that originate from atypical subnets; investigate new geos/IPs on API keys.

- Correlate OAuth admin‑consent events → ≥3× token spikes → list/get storms → mailbox/storage export jobs within 24 hours.
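The first alert above (more than 100 short JSON calls per hour, off‑hours) can be sketched as a per‑identity hourly counter. This is a minimal sketch assuming the records are already filtered to server or service‑principal identities talking to AI/model API endpoints, and that "off‑hours" means outside 08:00 to 18:00 local time; both the record shape and the business‑hours range are assumptions.

```python
from collections import Counter
from datetime import datetime


def short_json_bursts(records, threshold=100, max_bytes=2048,
                      business_hours=range(8, 18)):
    """records: iterable of (timestamp, identity, size_bytes, content_type).

    Returns {(identity, hour_start): count} for hours exceeding `threshold`.
    """
    counts = Counter()
    for ts, identity, size, ctype in records:
        if size >= max_bytes or "json" not in ctype.lower():
            continue  # only short (<2 KB) JSON exchanges count
        if ts.hour in business_hours:
            continue  # only off-hours traffic counts
        hour_start = ts.replace(minute=0, second=0, microsecond=0)
        counts[(identity, hour_start)] += 1
    return {key: n for key, n in counts.items() if n > threshold}
```

Bucketing by clock hour is the simplest option; a sliding window would catch bursts that straddle an hour boundary at the cost of more state.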

Endpoint

- Flag sequences where PowerShell performs ≥3 admin actions (registry, archive, share/WMI, egress) in ≤5 seconds with high‑entropy arguments.

- Detect scheduled tasks/services that rewrite the same script every 30–90 minutes with changing hashes but stable imported APIs.

- Block or alert on LOLBin execution (e.g., regsvr32, rundll32, msbuild) from user‑writable paths.
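The first endpoint detection above combines a timing rule (≥3 admin actions in ≤5 seconds) with an entropy test on arguments. The sketch below is one way to express it, assuming events are already limited to PowerShell admin actions on a single host; the 4.5 bits‑per‑character entropy cutoff is an illustrative assumption that would need tuning against real command lines.

```python
import math
from collections import Counter
from datetime import datetime, timedelta


def shannon_entropy(text: str) -> float:
    """Shannon entropy of the character distribution, in bits per character."""
    if not text:
        return 0.0
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in Counter(text).values())


def has_admin_burst(events, min_actions=3, window=timedelta(seconds=5),
                    min_entropy=4.5):
    """events: iterable of (timestamp, arguments) for admin actions on one host.

    True when `min_actions` high-entropy actions fall within `window`.
    """
    times = sorted(ts for ts, args in events if shannon_entropy(args) >= min_entropy)
    for i in range(len(times) - min_actions + 1):
        # Compare the first and last action of each run of min_actions events.
        if times[i + min_actions - 1] - times[i] <= window:
            return True
    return False
```

Filtering on entropy before applying the timing rule keeps routine administrative scripts, which tend to use readable arguments, from triggering the alert.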

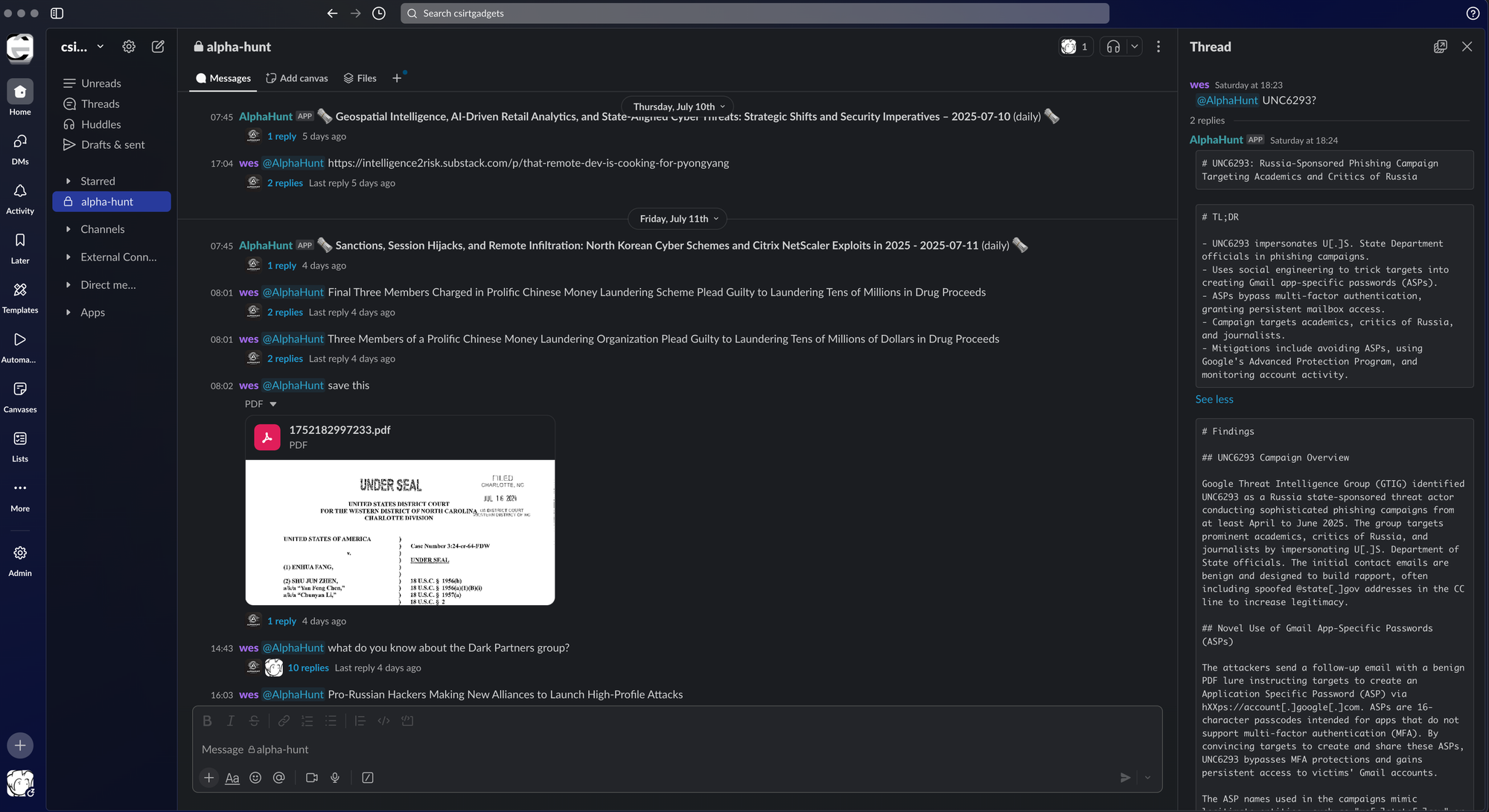


RESEARCH: Dark LLMs in 2026: e‑crime’s agentic turn against US critical infrastructure

TL;DR

- Dark LLMs are shifting from static “content helpers” to agentic chains that synthesize commands at runtime and iterate post-execution based on feedback.

- Underground markets are productizing malicious LLMs and selling stolen AI API keys; covert use of mainstream AI endpoints is becoming a preferred C2/exfil path.

- BEC and access-broker playbooks are fusing LLMs with phishing and phishing-as-a-service (PhaaS), one-time-password (OTP) bypass, and cloud recon to accelerate IT compromise that pressures OT dependencies.

- Defenders should rate-limit and scope AI tokens; block unsanctioned AI endpoints; hunt for one-liner synthesis and metamorphic scripts; and pre-stage OT isolation.

Scope and Audience

- Audience: Strategic/technical principals in US critical infrastructure sectors (energy, water/wastewater, healthcare, manufacturing, transportation, financial services).

- Focus: Financially motivated e‑crime; 2026 trajectory, with concrete ATT&CK mapping, detection opportunities, and sector-specific implications.

What’s Already Changing (late‑2024 → 2025 baselines)

- AI-aided obfuscation and lure quality are real but incremental.

  - Microsoft detected a phishing campaign whose SVG payload was likely LLM-generated, using verbose “business-analytics” constructs and synthetic naming to obfuscate redirection and session tracking. It was blocked through behavior and infrastructure analytics, not content inspection alone.

- Criminal marketplaces for AI tooling are maturing.

  - First-party analysis documents illicit AI tools marketed for phishing, malware scaffolding, and recon. They mirror SaaS tiers and pricing and lower the skill barrier for affiliates.

- Operational use of LLMs inside malware has begun.

  - Google reported the first in-the-wild cases of malware querying an LLM at runtime to generate one-line commands for discovery and document collection (PROMPTSTEAL), along with experimental self-rewriting droppers (PROMPTFLUX) built to evade static signatures.

- Fake AI websites serve as malvertising distribution.

  - Persistent campaigns abused “AI-themed” lures to deliver stealers and backdoors at scale, using rotating domains and ads, multi-stage loaders, and side-loading chains.

2026 Evolution Forecast: How Dark LLMs Will Concretely Scale e‑crime

...
