AI Agents as Regulated C2: Will Anyone Be Forced to Act?

AI just ran most of an espionage op, and regulators are still in “interesting case study” mode. 😏 Our forecast: 55% odds that by the end of 2026, a major regulator or platform will require signed AI connectors and auditable agent logs by default.

Strategic Overview

Question

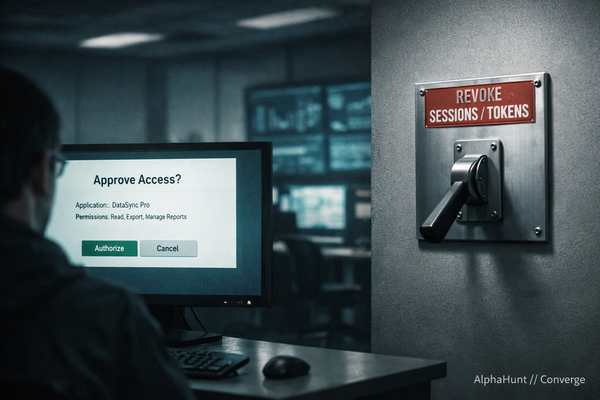

By 31 December 2026, will at least one major regulator or hyperscale SaaS/identity platform (e.g., SEC, FTC, EU DSA/DORA regulator, Microsoft, Google, AWS, Okta) publish binding requirements or default technical controls (not just guidance) that explicitly:

- treat AI agents/connectors as high-risk integration points, and

- require signed/attested connectors and auditable agent logs,

while citing an AI-orchestrated campaign like GTG-1002/Anthropic as part of the justification?

Forecast

I estimate a 55% chance that by end‑2026, at least one major regulator or hyperscale SaaS/identity provider will introduce binding or default‑on controls that:

- explicitly classify AI agents/connectors as high‑risk integration points

- require signed/attested connectors and auditable agent‑action logs

- explicitly cite an AI‑orchestrated intrusion—such as Anthropic’s GTG‑1002 or a similarly described “AI‑agent‑led” or “AI‑orchestrated” campaign—as part of the rationale.
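To make "signed/attested connectors" and "auditable agent-action logs" concrete, here is a minimal sketch of what such controls could look like. It is not any vendor's or regulator's actual design. The shared `PLATFORM_KEY`, the manifest fields, and the `AgentLog` class are all hypothetical, and a real platform would more likely use asymmetric signatures and a managed log service.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, for illustration only.
PLATFORM_KEY = b"demo-key-not-for-production"


def sign_manifest(manifest: dict, key: bytes = PLATFORM_KEY) -> str:
    """Return an HMAC-SHA256 tag over a canonical JSON encoding of the manifest."""
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_manifest(manifest: dict, tag: str, key: bytes = PLATFORM_KEY) -> bool:
    """Check the tag in constant time; any change to the manifest invalidates it."""
    return hmac.compare_digest(sign_manifest(manifest, key), tag)


class AgentLog:
    """Append-only action log where each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, agent: str, action: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"agent": agent, "action": action, "prev": prev}
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry breaks it."""
        prev = self.GENESIS
        for e in self.entries:
            body = {"agent": e["agent"], "action": e["action"], "prev": e["prev"]}
            if e["prev"] != prev or e["hash"] != self._digest(body):
                return False
            prev = e["hash"]
        return True
```

In this sketch, a connector whose scopes are changed after signing fails `verify_manifest`, and an agent log with a rewritten entry fails `verify()`. Those are the two properties the forecast's "signed" and "auditable" criteria point at.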

Because all three conditions must hold at once, the estimate sits only modestly above 50%. The most likely path is a major platform productizing default AI-agent governance and citing a GTG-1002-style narrative in its justification.
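The effect of requiring all three conditions can be sketched with the chain rule for joint probability. The input values below are invented purely for illustration and are not the inputs behind the 55% forecast. They only show how individually likely conditions multiply down to something near a coin flip.

```python
def joint_probability(p_high_risk: float,
                      p_controls_given_high_risk: float,
                      p_citation_given_both: float) -> float:
    """P(A and B and C) = P(A) * P(B | A) * P(C | A, B)."""
    return p_high_risk * p_controls_given_high_risk * p_citation_given_both


# Hypothetical inputs, NOT taken from the forecast:
# A: some actor classifies agents/connectors as high-risk
# B: that actor also mandates signing plus auditable logs
# C: the justification explicitly cites an AI-orchestrated campaign
estimate = joint_probability(0.85, 0.80, 0.80)
print(f"joint estimate: {estimate:.2f}")
```

Even with each step at 80% or higher, the joint estimate lands in the mid-50s, which is why a narrowly worded question keeps the forecast close to 50%.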

AlphaHunt


